Moving bits around: Automate Deployment Server Administration with GitHub Actions

Planning a sequel to the blog – Moving bits around: Deploying Splunk Apps with Github Actions – led me to an interesting experiment. What if we could manage and automate the deployment server the same way, without having to log on to the server at all? After all, the deployment server is just a bunch of app directories and a serverclass.conf file.

In the first part of this two-part blog series, linked above, we looked at managing the apps in the deployment-apps directory of the deployment server straight from a GitHub repository. I also wrote an addendum on how to leverage a native Splunk feature so that the deployment server can manage apps that are staged on a cluster manager or a search head cluster deployer, to be further deployed to indexers and search head cluster members respectively. Check that out here: Splunk Deployment Server: The Manager of Managers.

Let’s now combine the knowledge from both these blogs to up the ante and try to stage the apps as well as administer the serverclass.conf that determines which apps go to which deployment clients. I am going to use the same setup I used in the original blog post. So if you’d like a refresher, I recommend skimming through that one before reading on. Here we will just be enhancing those capabilities with additional configurations to achieve the goal.

GitHub Actions FTW Again!

So far we have covered what essentially constitutes a deployment server: a bunch of serverclasses that tie a set of specific apps to a set of specific clients. Now let’s look at how to manage a serverclass.conf from the GitHub repository, test-deploy-ds, where we are hosting the deployment-apps.

Recall that in the previous blog, although I created a dedicated user, ghuser, for authenticating to the deployment server, I also mentioned that you could just as easily use the splunk user, which owns the Splunk processes on the deployment server, the same way. In fact, I do recommend using splunk as the user in this scenario – provided splunk is a non-root user on the deployment server – to avoid having to modify permissions on the serverclass.conf file.

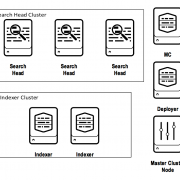

Additionally, since the only difference between how this works for a cluster manager vs. a deployer is really the repositoryLocation attribute in the deploymentclient.conf or targetRepositoryLocation attribute in the serverclass.conf depending on which approach you choose, I skipped including a deployer in the test setup below.

Before we go ahead and make any changes, let’s quickly run through a checklist to establish a common baseline. It is assumed that:

- You have a repo, test-deploy-ds in the existing setup, that has a deployment-apps directory with all the apps in it.
- You have a workflow action YAML file, push2ds.yml in the existing setup, defined under the .github/workflows/ directory of the repo.
- You have generated an SSH key for the user splunk, added the public key to /home/splunk/.ssh/authorized_keys on the deployment server, and can SSH into it with the private key of that user.
- You have updated the private key, deployment server host, and splunk username in the GitHub Secrets.
- Finally, any new check-ins to the repo successfully trigger and execute the GitHub action workflow without errors.
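For reference, a baseline push2ds.yml consistent with this checklist might look roughly like the following. This is only a sketch and not the exact file from the earlier post: the workflow and job names, the branch, the rsync switches, the secret names (DS_HOST, DS_USER, DS_SSH_KEY) and the /opt/splunk install path are all assumptions to adapt to your environment.

```yaml
# .github/workflows/push2ds.yml (baseline sketch; names and paths are assumptions)
name: push2ds

on:
  push:
    branches:
      - main   # assumed default branch

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      # Check out the repo so deployment-apps/ is available to rsync
      - name: Checkout repo
        uses: actions/checkout@v3

      # Sync deployment-apps/ to the deployment server over SSH
      - name: Sync deployment-apps
        uses: Burnett01/rsync-deployments@4.1
        with:
          switches: -avzr
          path: deployment-apps/
          remote_path: /opt/splunk/etc/deployment-apps/   # assumes SPLUNK_HOME=/opt/splunk
          remote_host: ${{ secrets.DS_HOST }}
          remote_user: ${{ secrets.DS_USER }}
          remote_key: ${{ secrets.DS_SSH_KEY }}
```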

First, the serverclass.conf needs to be synced to $SPLUNK_HOME/etc/system/local on the deployment server. So we add an additional step to push2ds.yml like so.
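As a rough sketch, the added step could look like the following. The step name, the rsync switches, the secret names (DS_HOST, DS_USER, DS_SSH_KEY) and the /opt/splunk install path are assumptions; only the action and its path and remote_path inputs come from the setup described here.

```yaml
# Goes under `steps:` in push2ds.yml, after the existing deployment-apps sync step
- name: Sync serverclass.conf
  uses: Burnett01/rsync-deployments@4.1
  with:
    switches: -avz
    path: serverclass.conf                          # file kept at the repo root
    remote_path: /opt/splunk/etc/system/local/      # assumes SPLUNK_HOME=/opt/splunk
    remote_host: ${{ secrets.DS_HOST }}             # hypothetical secret names
    remote_user: ${{ secrets.DS_USER }}
    remote_key: ${{ secrets.DS_SSH_KEY }}
```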

Note that we are using the same marketplace action, Burnett01/rsync-deployments@4.1. We invoke it once again, and the only variables that change are the path and remote_path attributes. In the second step, path is the root directory of the repo, where I placed the serverclass.conf (you could very well put it in, say, a “configs” directory and update the path accordingly), and remote_path is the $SPLUNK_HOME/etc/system/local directory on the deployment server.

Now let’s create a serverclass.conf and define a couple of serverclasses, one for the cluster manager and another for the universal forwarders. You could then attach a few apps from the deployment-apps directory to either the cluster manager or the universal forwarders like so.

    # serverclass.conf

    [serverClass:test-githubaction-cm]
    whitelist.0 = cm.*

    [serverClass:test-githubaction-uf]
    whitelist.0 = uf.*

    [serverClass:test-githubaction-cm:app:org_all_app_props]
    restartSplunkd = false
    stateOnClient = noop

    [serverClass:test-githubaction-cm:app:org_all_indexes]
    restartSplunkd = false
    stateOnClient = noop

    [serverClass:test-githubaction-cm:app:org_all_indexer_base]
    restartSplunkd = false
    stateOnClient = noop

    [serverClass:test-githubaction-cm:app:org_indexer_volume_indexes]
    restartSplunkd = false
    stateOnClient = noop

    [serverClass:test-githubaction-uf:app:org_all_forwarder_outputs]
    restartSplunkd = false
    stateOnClient = enabled

    [serverClass:test-githubaction-uf:app:org_dept_app_inputs]
    restartSplunkd = false
    stateOnClient = enabled

Notice that stateOnClient is ‘enabled’ for the universal forwarders (UFs) and ‘noop’ for the cluster manager (CM). Also, the allow-listing of clients in the above example is simplified. You have many more filtering options at your disposal, just like in the UI. If you are looking for more filtering options, I have linked to the Splunk Docs page in the Useful Links section at the bottom.

OK, now we have the serverclass.conf defined and the step added to the push2ds.yml workflow to sync it to $SPLUNK_HOME/etc/system/local on the deployment server. But just placing the serverclass.conf on the deployment server isn’t enough; you need to either restart the deployment server or, more elegantly, do a ‘reload’ of the serverclasses that we just added or modified.

In case you aren’t familiar with the Splunk CLI, we can do a reload of all serverclasses like so:

$ $SPLUNK_HOME/bin/splunk reload deploy-server

And we can reload a targeted serverclass like so:

$ $SPLUNK_HOME/bin/splunk reload deploy-server -class <serverclass>

The only limitation is that you can target either one specific serverclass using the -class key or all serverclasses at once by omitting it, but not multiple named serverclasses.

With that in mind, we use another GitHub Action from the marketplace, SSH Remote Commands (appleboy/ssh-action@master). This action exists specifically to execute one or more commands on a remote host over SSH. To use it, we add a step to the bottom of push2ds.yml like so.
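A sketch of what that step might look like is below. The environment variable names SERVERCLASS, DS_SPLUNK_USER and DS_SPLUNK_PWD are the ones used in this post; the step name, the connection secret names (DS_HOST, DS_USER, DS_SSH_KEY), the admin username and the /opt/splunk path are assumptions. The `${SERVERCLASS:+...}` shell expansion is my own choice for handling a blank value and may differ from how the original workflow did it.

```yaml
# Goes at the bottom of `steps:` in push2ds.yml
- name: Reload serverclass
  uses: appleboy/ssh-action@master
  env:
    SERVERCLASS: test-githubaction-cm             # leave empty to reload all serverclasses
    DS_SPLUNK_USER: admin                         # assumed Splunk administrator username
    DS_SPLUNK_PWD: ${{ secrets.DS_SPLUNK_PWD }}   # create this secret before using it
  with:
    host: ${{ secrets.DS_HOST }}                  # hypothetical secret names
    username: ${{ secrets.DS_USER }}
    key: ${{ secrets.DS_SSH_KEY }}
    envs: SERVERCLASS,DS_SPLUNK_USER,DS_SPLUNK_PWD
    script: |
      # Adds "-class <name>" only when SERVERCLASS is non-empty (assumes SPLUNK_HOME=/opt/splunk)
      /opt/splunk/bin/splunk reload deploy-server ${SERVERCLASS:+-class "$SERVERCLASS"} -auth "$DS_SPLUNK_USER:$DS_SPLUNK_PWD"
```

With this form, an empty SERVERCLASS runs a plain reload of every serverclass, while a non-empty value reloads just that one.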

Here you see a new subsection called ‘env:’ to add a few environment variables. I defined three environment variables:

- SERVERCLASS – holds the serverclass you need to reload. Note that for the first push, when you need to reload the entire serverclass.conf file, just leave it blank. Otherwise, just mention the serverclass you need reloaded after the -class key. Recall that you can only reload one specific serverclass at a time. So in case you have multiple serverclasses that need to be updated, it is recommended that each one is pushed to the repo as a separate commit. In our example, test-githubaction-cm and test-githubaction-uf will be updated in this variable and pushed separately.
- DS_SPLUNK_USER – this is the administrator username used to authenticate the reload API request, passed as a parameter value after the -auth key. This is because sometimes the reload command may prompt for authentication, and this will pre-emptively authenticate every time reload is executed.
- DS_SPLUNK_PWD – same explanation as above, for the administrator password. But notice that this obviously cannot be plain text, hence the use of GitHub Secrets. Remember to create the secret before using it here.

Next, in the ‘with:’ subsection, the ‘envs:’ attribute lists the three environment variables defined above so that they are available to the script. The last attribute is ‘script:’, which holds the command to be executed. Notice that the pipe (|) allows multiple commands to be listed underneath. In our case, we are just running a single command to reload the serverclass.

Before committing this, let me quickly highlight a previously discussed limitation of this setup that should serve as a strict word of caution. Recall that each merge to the repo is going to trigger either a reload of exactly one serverclass or a reload of all serverclasses, depending on the value of the SERVERCLASS environment variable. So before pushing each commit or merging each PR to the main branch, review the SERVERCLASS variable in the push2ds.yml file every time, without fail, to ensure that you are only reloading the serverclass you intend to reload.

Let’s commit and push these changes to our test-deploy-ds GitHub repo. It should trigger and finish the workflow like so:

Repeat the same for test-githubaction-uf serverclass as well and we can verify at the deployment server and clients like so:

Notice that both serverclasses are created in the deployment server and the attached apps are deployed to the respective clients as expected.

Conclusion

With all pieces put together, now we have a fairly robust framework for administering apps from deployment server not only to the forwarder endpoints but to indexers and search heads as well; all managed right from an upstream version control system like GitHub without even logging on to the deployment server. By giving users or other admins permission to submit pull requests to create/update/delete configurations in Splunk apps, you, the Splunk admin, have greater control over what gets deployed in your Splunk environment while being able to empower other users to submit new Splunk apps targeted at specific clients or modifications to existing ones.

Useful Links

Moving bits around: Deploying Splunk Apps with Github Actions

Splunk Deployment Server: The Manager of Managers

Use serverclass.conf to define server classes

Define filters through serverclass.conf

SSH Remote Commands – GitHub Action

Github Action for Rsync – rsync deployments

© Discovered Intelligence Inc., 2022. Unauthorized use and/or duplication of this material without express and written permission from this site’s owner is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to Discovered Intelligence, with appropriate and specific direction (i.e. a linked URL) to this original content.